PYLER

NVIDIA AI Day Seoul 2025 Recap: PYLER’s AI Stack for Video Understanding

2025. 12. 12.

NVIDIA AI Day Seoul 2025: The AI Stack Perspective

The main takeaway from NVIDIA AI Day Seoul 2025 was straightforward: in production, a strong model by itself isn’t enough. What matters is an AI stack that can run the model reliably, keep it healthy, and update it as the service evolves. NVIDIA stressed this because the pace of AI development keeps increasing: models turn over more quickly, training and fine-tuning cycles are shorter, and inference is shifting from single calls to multi-model, multi-step pipelines. A model is therefore no longer something you train once and deploy; it is a production system you have to operate and improve continuously.

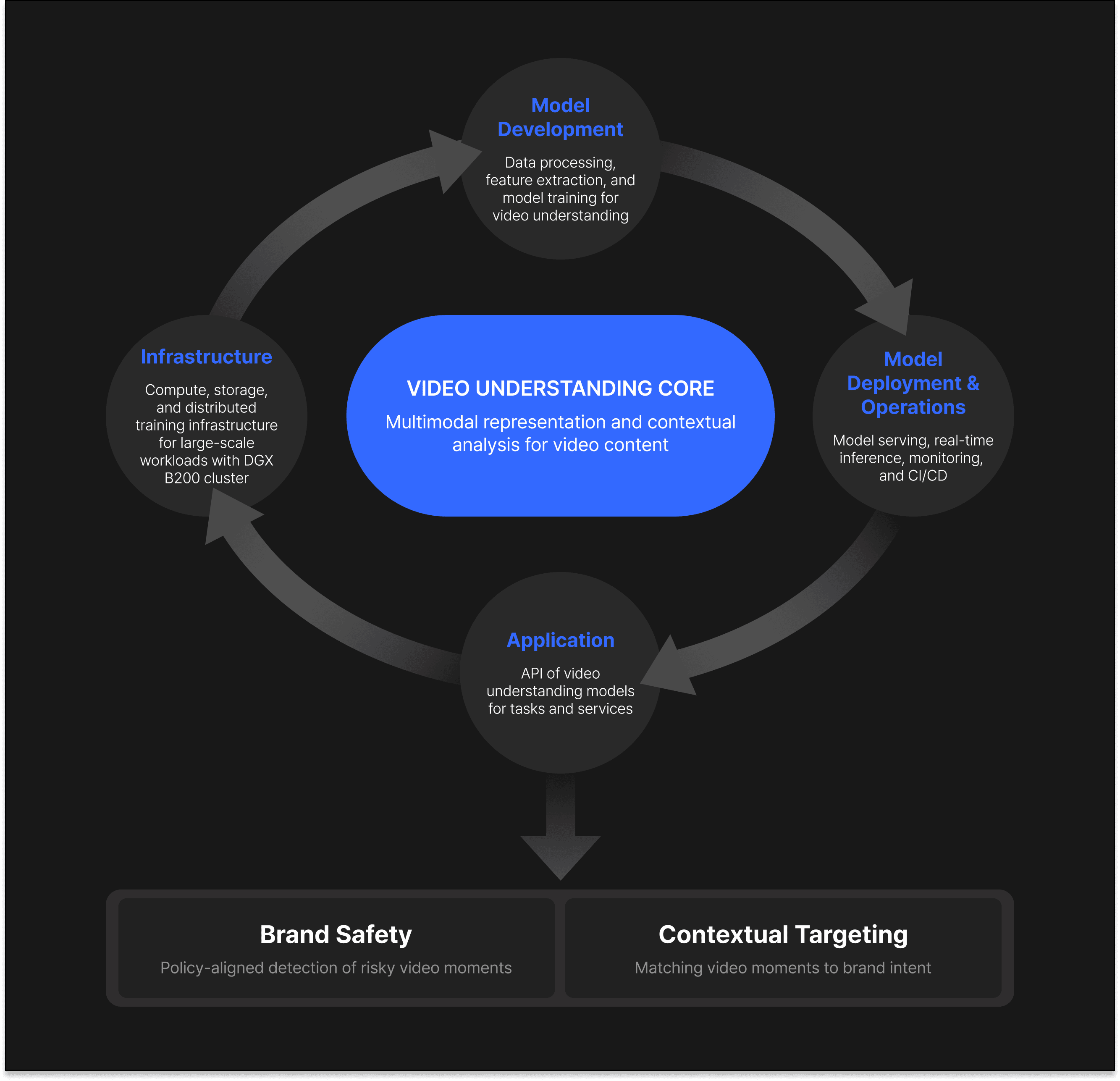

That’s why NVIDIA presented an end-to-end view of the AI stack—DGX clusters for infrastructure, NeMo for model development, NIM for deployment and operations, and Blueprints for applications. The key point is that these layers work as one continuous loop: train → deploy → monitor → update. PYLER sees this loop as foundational for video understanding in advertising.

Why the AI Stack Matters for PYLER’s Video Understanding in Advertising

That message came through just as clearly in PYLER’s session, “PYLER Builds Brand-Safe Multi-Modal AI on the NVIDIA DGX Blackwell Cluster.” CEO Jaeho Oh emphasized that multimodal video understanding in advertising creates real value only when it runs on a continuous loop across the AI stack, one that can absorb new content, policy changes, and emerging risks. In PYLER’s early product–market fit stage, the customer base and policy requirements were relatively simple, so a single trained model with lightweight operations could sustain Brand Safety and Contextual Targeting. He pointed out that this train-once approach no longer holds up in today’s market.

User-generated content (UGC) and AI-generated content (AIGC) keep changing what shows up in video, while platform policies, regulations, and brand standards keep moving at the same time. In this environment, it is no longer enough to train a strong model and consider the job done. The real challenge is to keep the model and its policy criteria evolving together as the market shifts. If the loop across the AI stack falls behind, the consequences show up immediately: ads placed next to unsafe content, unnecessary blocking, wasted spend, and brand risk. That’s why PYLER treats this continuous loop as table stakes for video understanding in advertising and stays focused on operating the video understanding core that underlies every downstream decision. Watch the session below for more details.

PYLER Session @ NVIDIA AI Day Seoul 2025: “PYLER Builds Brand-Safe Multi-Modal AI on the NVIDIA DGX Blackwell Cluster”

Where PYLER Applies the AI Stack: The Video Understanding Core

For PYLER, the loop across the AI stack is designed to keep the video understanding core operable in production. This core provides multimodal interpretation of video content and context. When the core stays stable while adapting through the loop, downstream ad decisions, such as Brand Safety and Contextual Targeting, can scale reliably.

In production, new UGC/AIGC patterns continually introduce out-of-distribution and borderline cases. When the online video understanding model classifies a segment as uncertain, the service routes that case to a high-fidelity multimodal large language model (MLLM) for policy-aware review. The MLLM re-evaluates the segment against policy prompts, assigns severity, and generates explicit evidence. These judgments, coupled with live operational telemetry, feed directly into data curation and fine-tuning, enabling rapid retraining and redeployment. This continuous feedback loop keeps the video understanding core aligned with content and policy shifts—this is the AI stack in production at PYLER.
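The routing step above can be sketched in a few lines. This is a minimal illustration under our own assumptions, not PYLER’s implementation: “uncertain” is approximated by a low top-1 probability or a small gap between the top two classes, the MLLM reviewer is passed in as a plain callable (stubbed here), and `Segment`, `Review`, `UncertaintyRouter`, and both threshold values are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class Segment:
    video_id: str
    start_s: float
    end_s: float
    probs: Dict[str, float]  # online model's class probabilities (assumed to sum to 1)


@dataclass
class Review:
    segment: Segment
    label: str      # policy label assigned by the MLLM reviewer
    severity: int   # hypothetical scale: 0 = none ... 3 = severe
    evidence: str   # explicit justification produced by the reviewer


@dataclass
class UncertaintyRouter:
    min_confidence: float = 0.6  # hypothetical: minimum top-1 probability
    min_margin: float = 0.3      # hypothetical: minimum gap between top-1 and top-2
    auto_decisions: List[Tuple[Segment, str]] = field(default_factory=list)
    curation_pool: List[Review] = field(default_factory=list)

    def is_uncertain(self, seg: Segment) -> bool:
        ranked = sorted(seg.probs.values(), reverse=True)
        top1 = ranked[0]
        top2 = ranked[1] if len(ranked) > 1 else 0.0
        return top1 < self.min_confidence or (top1 - top2) < self.min_margin

    def route(self, seg: Segment, reviewer: Callable[[Segment], Review]) -> str:
        """Decide confident segments online; escalate uncertain ones to the reviewer."""
        if self.is_uncertain(seg):
            review = reviewer(seg)             # stands in for the policy-aware MLLM call
            self.curation_pool.append(review)  # kept as candidate fine-tuning data
            return review.label
        label = max(seg.probs, key=seg.probs.get)
        self.auto_decisions.append((seg, label))
        return label


# Usage with a stubbed reviewer (a real system would call an MLLM here).
def fake_mllm(seg: Segment) -> Review:
    return Review(seg, "violence", severity=2, evidence="weapon visible")


router = UncertaintyRouter()
router.route(Segment("v1", 0.0, 5.0, {"safe": 0.95, "violence": 0.05}), fake_mllm)    # -> "safe"
router.route(Segment("v1", 12.0, 14.5, {"safe": 0.55, "violence": 0.45}), fake_mllm)  # -> "violence"
```

Injecting the reviewer as a callable keeps the routing logic testable without a live MLLM; `curation_pool` stands in for whatever store actually feeds data curation and fine-tuning.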

To keep this loop fast enough under real service conditions, PYLER runs the pipeline on a B200-based DGX Blackwell cluster. Compared with an A100-based DGX setup, data preprocessing runs up to 4x faster and inference throughput is roughly 3x higher. This end-to-end acceleration allows for more frequent model and policy updates, so changes are reflected quickly in live decisions.
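To see how the reported speedups translate into loop turnaround, here is a back-of-envelope estimate in the spirit of Amdahl’s law. It assumes one cycle consists of preprocessing, fine-tuning, and re-scoring with inference; it applies the post’s 4x and 3x figures to the first and last stages and leaves fine-tuning time unchanged because the post gives no figure for it. The function name and stage breakdown are hypothetical, and since 4x is an “up to” figure, the result is a best case for preprocessing.

```python
def loop_turnaround_hours(preprocess_h: float, train_h: float, inference_h: float,
                          preprocess_speedup: float = 4.0,
                          inference_speedup: float = 3.0) -> float:
    """Estimated hours for one preprocess -> fine-tune -> re-score cycle.

    Only preprocessing and inference are scaled by the reported speedups;
    fine-tuning time is kept as-is because no speedup is stated for it.
    """
    return preprocess_h / preprocess_speedup + train_h + inference_h / inference_speedup


# Hypothetical stage times (hours), for illustration only.
baseline = loop_turnaround_hours(8.0, 4.0, 6.0, preprocess_speedup=1.0, inference_speedup=1.0)  # 18.0
accelerated = loop_turnaround_hours(8.0, 4.0, 6.0)  # 2.0 + 4.0 + 2.0 = 8.0
```

With these made-up stage times, one cycle drops from 18 h to 8 h. The larger the share of a cycle spent outside the accelerated stages, the smaller the overall gain.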

The PYLER AI stack keeps the video understanding core evolving—so Brand Safety and Contextual Targeting stay reliable in production.

How PYLER Brings Video Understanding into Production

Within the AI stack, the application layer is where video understanding has to prove itself: the core’s outputs need to drive real-time service decisions, rather than remain offline model scores. PYLER is building that bridge through ongoing collaboration with NVIDIA’s NeMo and VSS (Video Search & Summarization) teams, using VSS as the pipeline where it validates and operates its own video understanding use cases.

In this setup, each video is segmented over time into semantic moments, then embedded, indexed, and retrieved as the core input to downstream decisions. Each moment fuses visual, audio, and text into a service-ready representation of what happened, when it happened, and in what context, with explicit evidence and contextual attributes that map directly to brand and policy rules. These service-ready moments plug straight into operations: Brand Safety applies enforcement on risky moments with clear justification, while Contextual Targeting uses brand-aligned moments as inputs to recommendation and re-ranking.
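The post does not say how VSS segments, fuses, or indexes moments internally, so the following is only an illustrative stand-in built from common, simple choices: a new moment starts wherever consecutive frame embeddings drop below a cosine-similarity threshold, per-modality vectors are L2-normalized and concatenated (late fusion), and retrieval is brute-force cosine search. `segment_into_moments`, `fuse`, `Moment`, `MomentIndex`, and the 0.8 threshold are hypothetical and are not VSS APIs; embeddings from real visual, audio, and text encoders are assumed to be computed upstream.

```python
import math
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vector = List[float]


def _normalize(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(x * x for x in v)) or 1.0  # avoid dividing by zero
    return [x / norm for x in v]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(_normalize(a), _normalize(b)))


def segment_into_moments(frame_embs: List[Vector],
                         boundary_threshold: float = 0.8) -> List[Tuple[int, int]]:
    """Split frames into [start, end) spans wherever adjacent frames fall below
    the cosine-similarity threshold (a simple hypothetical boundary heuristic)."""
    if not frame_embs:
        return []
    spans, start = [], 0
    for i in range(1, len(frame_embs)):
        if cosine(frame_embs[i - 1], frame_embs[i]) < boundary_threshold:
            spans.append((start, i))
            start = i
    spans.append((start, len(frame_embs)))
    return spans


def fuse(visual: Vector, audio: Vector, text: Vector) -> Vector:
    """Late fusion: concatenate per-modality unit vectors, then renormalize."""
    return _normalize(_normalize(visual) + _normalize(audio) + _normalize(text))


@dataclass
class Moment:
    video_id: str
    start_s: float
    end_s: float
    embedding: Vector  # fused visual + audio + text vector
    attributes: dict   # contextual attributes, e.g. {"topic": "cooking"}


class MomentIndex:
    """Brute-force cosine index over moments."""

    def __init__(self) -> None:
        self._moments: List[Moment] = []

    def add(self, moment: Moment) -> None:
        self._moments.append(moment)

    def search(self, query: Vector, k: int = 5) -> List[Tuple[float, Moment]]:
        scored = [(cosine(query, m.embedding), m) for m in self._moments]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return scored[:k]
```

Brute-force search keeps the sketch dependency-free; at production scale an approximate-nearest-neighbor index or vector database would take its place.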

This end-to-end flow extends the AI stack loop into the application layer, turning adaptive understanding into operational decisions and feeding outcomes back into continuous refinement.
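The decision step described above can be sketched as follows, again under stated assumptions rather than as PYLER’s logic: each moment carries per-label severities on a hypothetical 0 to 3 scale plus an evidence string, a brand’s tolerance is a per-label maximum, Brand Safety blocks with the first violating moment as its justification, and Contextual Targeting re-ranks candidate ads by their best cosine match against the video’s moments. Every decision is appended to a log that stands in for the feedback path into refinement. `Moment`, `BrandPolicy`, `DecisionLog`, and the other names are illustrative.

```python
import math
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Sequence, Tuple


@dataclass
class Moment:
    start_s: float
    end_s: float
    embedding: List[float]  # fused moment representation, computed upstream
    risk: Dict[str, int]    # policy label -> severity (hypothetical 0..3 scale)
    evidence: str = ""      # justification attached to the moment


@dataclass
class BrandPolicy:
    brand: str
    max_severity: Dict[str, int]  # per-label tolerance; unlisted labels are tolerated


def _cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def brand_safety_check(moments: List[Moment], policy: BrandPolicy) -> Optional[str]:
    """Return a justification for the first moment exceeding the brand's tolerance, else None."""
    for m in moments:
        for label, severity in m.risk.items():
            if severity > policy.max_severity.get(label, 3):
                return f"{label} severity {severity} at {m.start_s:.1f}-{m.end_s:.1f}s: {m.evidence}"
    return None


def rerank_ads(ads: Dict[str, List[float]], moments: List[Moment]) -> List[Tuple[str, float]]:
    """Score each candidate ad by its best cosine match against the video's moments."""
    scored = [
        (ad_id, max((_cosine(vec, m.embedding) for m in moments), default=0.0))
        for ad_id, vec in ads.items()
    ]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


@dataclass
class DecisionLog:
    records: List[dict] = field(default_factory=list)

    def decide(self, video_id: str, moments: List[Moment], policy: BrandPolicy,
               ads: Dict[str, List[float]]) -> dict:
        reason = brand_safety_check(moments, policy)
        if reason is not None:
            record = {"video_id": video_id, "brand": policy.brand,
                      "action": "block", "reason": reason}
        else:
            record = {"video_id": video_id, "brand": policy.brand,
                      "action": "serve", "ranking": rerank_ads(ads, moments)}
        self.records.append(record)  # retained as feedback for later curation and tuning
        return record
```

The same video can be blocked for one brand and served for another, which is why the tolerance lives in `BrandPolicy` rather than in the moment labels themselves.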

Conclusion: Video Understanding on the AI Stack

Taken together, NVIDIA AI Day Seoul 2025 and PYLER’s session point to the same conclusion: in production, AI only works when it’s operated as a system, not just shipped as a model. NVIDIA’s AI stack view—spanning infrastructure, model development, deployment, and applications—lines up with how PYLER runs its own video understanding core: as something that is continuously monitored, updated, and pushed back into real services for Brand Safety and Contextual Targeting in video advertising.

This post recapped that shared perspective and outlined how PYLER applies the AI stack to keep its video understanding core evolving. In the next post in this series, we’ll go deeper into the core itself: how video is segmented into semantic moments with VSS, how temporal meaning is tracked over time, and how those representations are wired into PYLER’s real use cases for Brand Safety and Contextual Targeting.

This piece was written by Minseok Lee, ML Engineer at PYLER.